I. Introduction

Large Language Models (LLMs) are pushing the boundaries of artificial intelligence, enabling human-like text generation and the understanding of complex concepts. However, training these models requires immense computational resources. This document explores distributed training and shows how to use multiple GPUs efficiently to train LLMs with Slurm on Metal Cloud.

1. Purpose

This document presents a proof of concept (PoC) for developing and training Large Language Models (LLMs) with open-source tools. The setup is designed to adapt easily to various frameworks that support distributed training and aims to streamline the debugging process.

2. Context: Why Does Training LLMs Require a Multi-Node (Cluster) Setup?

Large Language Models (LLMs) have significantly advanced artificial intelligence, particularly in the field of natural language processing. Recent models such as GPT-2, GPT-3, and LLaMA2 can understand and generate human-like text with impressive accuracy.

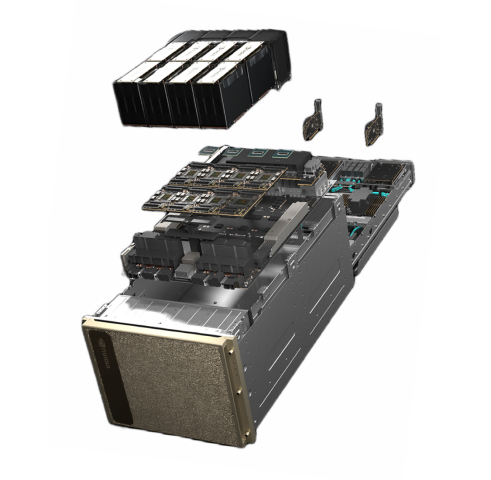

Training LLMs is highly resource-intensive. Distributed training on clusters of GPUs, such as the NVIDIA H100, has become essential for accelerating the training process and handling large datasets efficiently.

Although training LLMs on a single node is technically feasible, several limitations make this approach impractical:

- Extended Training Time: Training on a single node significantly increases the duration of each training cycle, making it inefficient for large-scale models.

- Hardware Limitations: Single-node systems often lack the memory and processing power necessary to handle extremely large models. For instance, models exceeding 70 billion parameters or datasets with over 37,000 samples may exceed the available GPU memory and storage capacity of a single machine.

- Scalability Issues: As model size and dataset complexity increase, single-node training struggles to efficiently utilize resources, leading to bottlenecks and suboptimal performance.
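To make the hardware limitation above concrete, the following back-of-the-envelope sketch estimates the memory needed just to hold the training state of a 70-billion-parameter model. The accounting of 16 bytes per parameter (a common rule of thumb for mixed-precision training with the Adam optimizer), the 80 GB GPU size, and the 8-GPU node size are all assumptions chosen for illustration, not figures from this PoC. Activations and framework overhead are ignored, so real requirements would be higher.

```python
import math

# Assumed accounting for mixed-precision training with Adam:
# 2 bytes (16-bit weights) + 2 bytes (16-bit gradients)
# + 12 bytes (fp32 master weights and Adam's two moment estimates).
BYTES_PER_PARAM = 2 + 2 + 12
GPU_MEMORY_GB = 80   # assumed memory per GPU
GPUS_PER_NODE = 8    # assumed node size


def training_state_gb(num_params: float) -> float:
    """Memory for weights, gradients, and optimizer state, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Smallest GPU count whose combined memory holds the training state."""
    return math.ceil(training_state_gb(num_params) / gpu_memory_gb)


def min_nodes(num_params: float, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Smallest node count that provides at least min_gpus GPUs."""
    return math.ceil(min_gpus(num_params) / gpus_per_node)


if __name__ == "__main__":
    params = 70e9
    print(f"training state: {training_state_gb(params):.0f} GB")  # 1120 GB
    print(f"GPUs needed:    {min_gpus(params)}")                  # 14
    print(f"nodes needed:   {min_nodes(params)}")                 # 2
```

Under these assumptions, the training state alone would not fit on a single 8-GPU node, which is the situation a multi-node setup is meant to address.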

These challenges are effectively addressed by utilizing a multi-node (cluster) training setup, which distributes computational workloads across multiple GPUs and accelerates training while ensuring scalability. This approach enables:

- Parallel Processing: Distributing model training across multiple nodes reduces processing time and optimizes resource utilization.

- Handling Large Models & Datasets: Multi-node setups can accommodate LLMs with billions of parameters by splitting the workload across multiple GPUs and nodes.

- Improved Fault Tolerance & Flexibility: Cluster computing provides redundancy and enables better handling of system failures, ensuring training stability.

By leveraging a multi-node Slurm cluster, organizations and researchers can efficiently train LLMs while overcoming the constraints of single-node training.
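The idea of splitting a workload across nodes and GPUs can be illustrated with a minimal data-parallel sketch. Each GPU process is given a global rank, and the dataset is divided so that every rank sees a distinct slice. The function names `global_rank` and `shard_indices` are hypothetical helpers written for this illustration. Real frameworks typically add shuffling and padding on top of this basic scheme.

```python
from typing import List


def global_rank(node_index: int, local_gpu: int, gpus_per_node: int) -> int:
    """Map a (node, local GPU) pair to a single rank across the cluster."""
    return node_index * gpus_per_node + local_gpu


def shard_indices(num_samples: int, rank: int, world_size: int) -> List[int]:
    """Strided split: rank r takes samples r, r + world_size, r + 2 * world_size, ..."""
    return list(range(rank, num_samples, world_size))


if __name__ == "__main__":
    nodes, gpus_per_node = 2, 4          # illustrative cluster shape
    world_size = nodes * gpus_per_node   # 8 processes in total
    rank = global_rank(node_index=1, local_gpu=1, gpus_per_node=gpus_per_node)
    print(rank)                                 # 5
    print(shard_indices(20, rank, world_size))  # [5, 13]
```

Because every sample index belongs to exactly one rank, the ranks can process their slices in parallel without duplicating work.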

3. SLURM – The Backbone of High-Performance Computing for AI

As AI projects continue to grow in complexity and scale, the demand for high-performance computing (HPC) environments is increasing rapidly. This expansion requires efficient resource management, a challenge that SLURM (Simple Linux Utility for Resource Management) is designed to address.

SLURM acts as the central nervous system of an HPC environment by enabling AI engineers to maximize computing cluster performance and tackle the most demanding AI workloads. It ensures:

- Optimized Task Distribution: Workloads are efficiently allocated across computing nodes to maintain performance balance.

- Intelligent Resource Management: Critical resources such as CPU cores, memory, and specialized hardware like GPUs are dynamically assigned to maximize efficiency.

- Scalability & Adaptability: SLURM reallocates resources as needed, ensuring smooth scalability and efficient workload execution.

By leveraging SLURM, AI researchers and engineers can harness the full power of distributed computing, enabling faster and more efficient training of Large Language Models (LLMs) and other complex AI applications.
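One practical consequence of SLURM's task distribution is that each launched task can discover its own placement from environment variables that SLURM sets for tasks started with `srun`, such as `SLURM_PROCID`, `SLURM_NTASKS`, `SLURM_LOCALID`, and `SLURM_NODEID`. The sketch below shows one way a training script might read them. The `TaskPlacement` type and the `placement_from_slurm` helper are hypothetical names introduced here, and the single-process fallback values are an assumption made for local debugging.

```python
import os
from typing import Mapping, NamedTuple


class TaskPlacement(NamedTuple):
    rank: int        # global task index across the job
    world_size: int  # total number of tasks in the job
    local_rank: int  # task index within its node
    node_id: int     # index of the node within the allocation


def placement_from_slurm(env: Mapping[str, str] = os.environ) -> TaskPlacement:
    """Read task placement from SLURM variables, defaulting to a single process."""
    return TaskPlacement(
        rank=int(env.get("SLURM_PROCID", "0")),
        world_size=int(env.get("SLURM_NTASKS", "1")),
        local_rank=int(env.get("SLURM_LOCALID", "0")),
        node_id=int(env.get("SLURM_NODEID", "0")),
    )


if __name__ == "__main__":
    placement = placement_from_slurm()
    print(f"rank {placement.rank} of {placement.world_size} "
          f"(node {placement.node_id}, local rank {placement.local_rank})")
```

Run outside a SLURM job, the helper falls back to rank 0 of 1, so the same script can be exercised on a workstation before it is submitted to the cluster.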

4. Why Deploy SLURM on Kubernetes?

SLURM is a widely used job scheduler for HPC, while Kubernetes (K8s) is the leading container orchestration platform for managing distributed workloads. Combining SLURM with Kubernetes offers several advantages:

- Enhanced Scalability & Dynamic Resource Allocation: Kubernetes enables auto-scaling of compute resources based on workload demand, dynamically provisioning or deallocating nodes as needed. Unlike traditional SLURM clusters, which are often static, running SLURM on K8s allows for on-demand scaling, optimizing resource utilization.

- Improved Containerized Workflows & Portability: AI/ML and HPC workloads increasingly rely on containerized environments (e.g., Docker, Singularity). Kubernetes provides native support for containers, making it easier to package and deploy SLURM workloads across multi-cloud and hybrid environments.

- Efficient Multi-Tenancy & Isolation: Kubernetes supports namespace-based isolation, enabling multiple teams to run SLURM jobs securely on shared infrastructure. Resource quotas and limits in K8s help ensure fair allocation of CPU, GPU, and memory among different workloads.

- Integration with Cloud-Native Ecosystem: Running SLURM on K8s allows integration with cloud-native tools like Prometheus (monitoring), Grafana (visualization), and Argo Workflows (pipeline automation). This enables a modern, observability-driven approach to HPC workload management.

- Cost Optimization for Cloud-Based HPC: Traditional SLURM clusters often require dedicated hardware, leading to underutilization when workloads are low. With Kubernetes, organizations can dynamically spin up and terminate cloud-based nodes, reducing unnecessary costs while ensuring peak performance during intensive computational workloads.