An API call away

A fast inference at play

Get started with up to 100 million free tokens to access the latest models and scale effortlessly

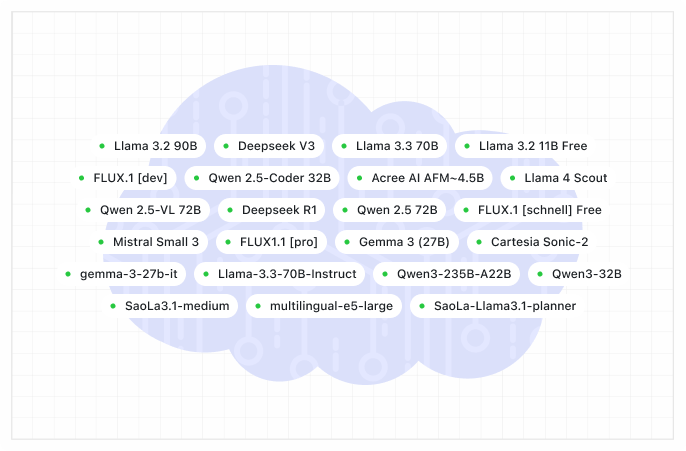

Leverage open-source and FPT’s specialized multimodal models for chat, code, and more.

Easily migrate from closed-source solutions via OpenAI-compatible APIs.

With minimal infrastructure changes, you can set up the service in hours, boosting productivity and reducing setup time.

Prevents companies from overpaying for unused resources, as pricing based on actual usage

Enables uninterrupted service at all times, even with large datasets or fluctuating demand.

Time to first token under

Powered by thousands of NVIDIA Hopper

than hyperscalers

Optimize your performance by deploying & integrating in one steamlined workflow

FPT delivers the infrastructure, tooling, & expertise needed with the most reasonable price